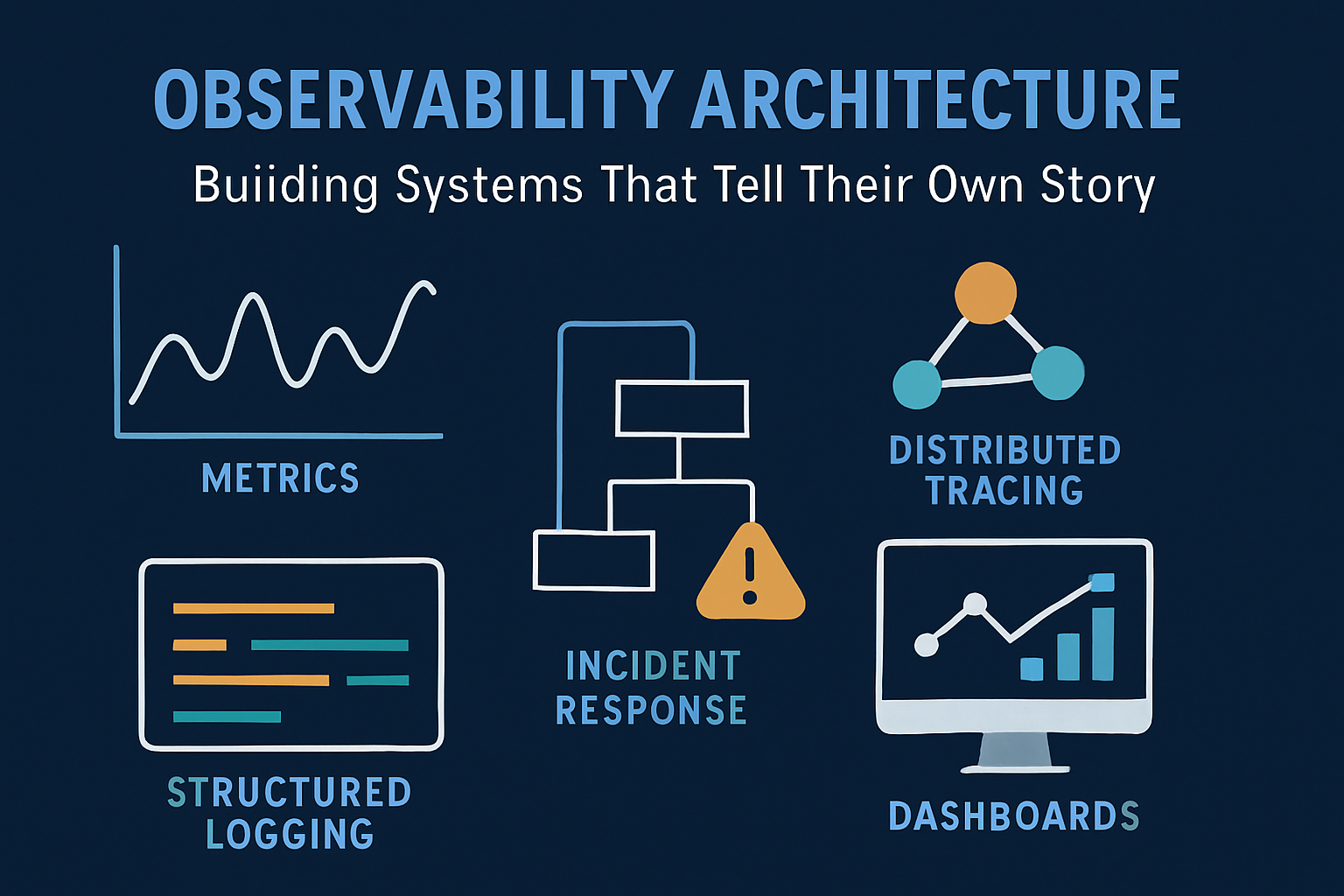

Observability Architecture: Building Systems That Tell Their Own Story

A Strategic Guide to Intelligence-Driven Operations

Most teams think observability is about collecting metrics and logs. Senior engineers understand it's about designing systems that can explain their own behavior under any conditions—especially when things go wrong.

The difference isn't just technical depth; it's architectural thinking. While monitoring tells you what is happening, observability enables you to understand why it's happening, even for scenarios you've never seen before.

Having architected observability platforms for systems that process billions of events daily, I've seen a clear pattern. Organizations that treat observability as "monitoring plus logging" get visibility into known problems. Those that treat it as a first-class architectural concern build systems that surface reliability problems early enough to act on them proactively.

Beyond Monitoring: The Observability Paradigm Shift

The Strategic Problem

Traditional monitoring assumes you know what will break. You set up alerts for CPU usage, memory consumption, disk space—the predictable failure modes. But in distributed systems with hundreds of microservices, the interesting failures are emergent behaviors you couldn't have predicted.

Consider this scenario: Your payment service starts failing, but CPU and memory look normal. Database connections are healthy. Network latency is fine. Traditional monitoring shows green across the board, yet customers can't complete purchases.

Observability lets you ask arbitrary questions and get answers without pre-defined dashboards or alerts. For example: "Show me all requests that took longer than 500ms, broken down by user tier, payment method, and geographic region, correlated with deployment events from the last hour."
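Here is a minimal Python sketch of answering that question on the fly. It runs over raw, wide request events held in memory. The event fields (`duration_ms`, `user_tier`, `payment_method`, `region`, `timestamp`) and the deployment records are hypothetical. A real system would run the equivalent query in its event store rather than in application code.

```python
from collections import Counter
from datetime import datetime, timedelta

# Hypothetical wide events: one record per request, many dimensions attached.
events = [
    {"timestamp": datetime(2024, 1, 15, 10, 20), "duration_ms": 820,
     "user_tier": "premium", "payment_method": "credit_card", "region": "eu-west"},
    {"timestamp": datetime(2024, 1, 15, 10, 25), "duration_ms": 120,
     "user_tier": "free", "payment_method": "paypal", "region": "us-east"},
    {"timestamp": datetime(2024, 1, 15, 10, 28), "duration_ms": 950,
     "user_tier": "premium", "payment_method": "credit_card", "region": "eu-west"},
    {"timestamp": datetime(2024, 1, 15, 9, 0), "duration_ms": 700,
     "user_tier": "free", "payment_method": "paypal", "region": "us-east"},
]
# Hypothetical deployment events.
deployments = [{"service": "payment-service", "timestamp": datetime(2024, 1, 15, 10, 15)}]


def slow_requests_breakdown(events, deployments, now, threshold_ms=500,
                            window=timedelta(hours=1)):
    """Group slow requests from the last `window` by tier, method and region,
    and return the deployments that happened inside the same window."""
    since = now - window
    slow = [e for e in events
            if e["timestamp"] >= since and e["duration_ms"] > threshold_ms]
    breakdown = Counter((e["user_tier"], e["payment_method"], e["region"]) for e in slow)
    recent_deploys = [d for d in deployments if d["timestamp"] >= since]
    return breakdown, recent_deploys


breakdown, recent = slow_requests_breakdown(events, deployments,
                                            now=datetime(2024, 1, 15, 10, 30))
print(breakdown)  # Counter({('premium', 'credit_card', 'eu-west'): 2})
print(recent)
```

None of the dimensions had to be chosen ahead of time. That is the point: the raw events keep them all, so the grouping can change with the question.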

This is the paradigm shift: from known-unknowns to unknown-unknowns.

The Business Case for Observability

Organizations that implement comprehensive observability architectures often report improvements in the following ranges, although results depend heavily on their starting point:

- 70-85% reduction in mean time to resolution (MTTR)

- 60% fewer escalations to senior engineers

- 40-50% improvement in customer satisfaction during incidents

- 3-5x faster feature delivery cycles due to confident deployments

More importantly, they shift from reactive firefighting to predictive engineering.

The Three Pillars Architecture

Enterprise observability rests on three interconnected pillars that together create a complete picture of system behavior.

Metrics: The Quantitative Foundation

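Metrics provide the quantitative backbone of observability. They are numeric, aggregated time-series data that are cheap to store and query, which enables both real-time alerting and historical analysis. Keep their label cardinality bounded. High-cardinality details such as user IDs belong in logs and traces.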

Strategic Pattern: Business-Contextual Metrics

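```python
from prometheus_client import Counter

# Focus on business impact, not just technical metrics.
# Keep label values bounded (a handful of methods, currencies, tiers), never raw user IDs.
payment_requests_total = Counter(
    'payment_requests_total',
    'Total payment requests processed',
    ['method', 'currency', 'user_tier', 'result'],  # Business dimensions
)
```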

Key Insight: The most powerful metrics combine technical measurements with business context. Instead of just measuring "requests per second," measure "revenue-generating requests per second by customer tier."
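To make "revenue-generating requests per second by customer tier" concrete, the sketch below derives that rate from two scrapes of a labeled counter like `payment_requests_total`. This is roughly what PromQL's `rate()` does over a range, minus its counter-reset and extrapolation handling. Two things are assumptions for illustration: the snapshot format, and the rule that only `result="success"` counts as revenue-generating.

```python
from collections import defaultdict

# Hypothetical counter snapshots:
# label tuple (method, currency, user_tier, result) -> cumulative count.
scrape_t0 = {
    ("credit_card", "USD", "premium", "success"): 1000,
    ("credit_card", "USD", "free", "success"): 400,
    ("credit_card", "USD", "free", "failure"): 50,
}
scrape_t1 = {
    ("credit_card", "USD", "premium", "success"): 1600,
    ("credit_card", "USD", "free", "success"): 460,
    ("credit_card", "USD", "free", "failure"): 80,
}


def revenue_rate_by_tier(before, after, interval_s):
    """Per-second rate of successful (revenue-generating) payments, summed by tier."""
    rates = defaultdict(float)
    for labels, end_value in after.items():
        _, _, tier, result = labels
        if result != "success":
            continue  # failed payments generate no revenue
        delta = end_value - before.get(labels, 0)
        rates[tier] += delta / interval_s
    return dict(rates)


print(revenue_rate_by_tier(scrape_t0, scrape_t1, interval_s=60))
# {'premium': 10.0, 'free': 1.0}
```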

Structured Logging: The Contextual Layer

While metrics provide quantitative data, logs provide the qualitative context that explains system behavior.

Strategic Pattern: Correlation-Driven Logging

```json
{
  "timestamp": "2024-01-15T10:30:00Z",
  "level": "error",
  "service": "payment-service",
  "trace_id": "abc123def456",
  "user_id": "user_789",
  "business_impact": "revenue_loss",
  "message": "Payment gateway timeout",
  "context": {
    "payment_method": "credit_card",
    "amount": 99.99,
    "retry_count": 2
  }
}
```

Key Insight: Every log entry should answer three questions: What happened? Why does it matter to the business? How can we correlate it with other events?
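One way to emit entries shaped like the example above is a small JSON formatter built on Python's standard `logging` module. It copies correlation fields that callers pass through `extra`. The field names mirror the example. The `JsonFormatter` class and the `CONTEXT_FIELDS` tuple are hypothetical, and a production setup would more likely use an established structured-logging library.

```python
import io
import json
import logging
from datetime import datetime, timezone

# Fields callers are expected to pass via `extra=` so entries can be correlated.
CONTEXT_FIELDS = ("service", "trace_id", "user_id", "business_impact", "context")


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record):
        entry = {
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc)
            .strftime("%Y-%m-%dT%H:%M:%SZ"),
            "level": record.levelname.lower(),
            "message": record.getMessage(),
        }
        for field in CONTEXT_FIELDS:
            if hasattr(record, field):  # keys in `extra` become record attributes
                entry[field] = getattr(record, field)
        return json.dumps(entry)


stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(JsonFormatter())
logger = logging.getLogger("payment-service")
logger.addHandler(handler)
logger.setLevel(logging.INFO)
logger.propagate = False  # keep the demo output in our own handler only

logger.error(
    "Payment gateway timeout",
    extra={
        "service": "payment-service",
        "trace_id": "abc123def456",
        "user_id": "user_789",
        "business_impact": "revenue_loss",
        "context": {"payment_method": "credit_card", "amount": 99.99, "retry_count": 2},
    },
)
print(stream.getvalue())
```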

Distributed Tracing: The Correlation Engine

Distributed tracing provides end-to-end visibility across service boundaries, enabling you to understand request flows through complex systems.

Strategic Pattern: Business Journey Mapping

Key Insight: Traces should follow business workflows, not just technical call chains. Map user journeys, not service dependencies.
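To illustrate tracing along a business workflow, here is a deliberately tiny, dependency-free tracer. It is not OpenTelemetry. The root span is the user journey (`checkout`), and child spans carry business attributes. The `Tracer` class, span names, and attributes are all hypothetical. In practice you would use a tracing SDK such as OpenTelemetry and set equivalent attributes on its spans.

```python
import contextvars
import time
import uuid
from contextlib import contextmanager

# The currently active span, so nested spans pick up their parent automatically.
_current_span = contextvars.ContextVar("current_span", default=None)


class Tracer:
    def __init__(self):
        self.finished = []  # completed spans, in the order they ended

    @contextmanager
    def span(self, name, **attributes):
        parent = _current_span.get()
        span = {
            "name": name,
            "trace_id": parent["trace_id"] if parent else uuid.uuid4().hex,
            "span_id": uuid.uuid4().hex[:16],
            "parent_id": parent["span_id"] if parent else None,
            "attributes": attributes,
            "start": time.monotonic(),
        }
        token = _current_span.set(span)
        try:
            yield span
        finally:
            span["duration_s"] = time.monotonic() - span["start"]
            _current_span.reset(token)
            self.finished.append(span)


tracer = Tracer()

# The root span is the business journey, not a single service call.
with tracer.span("checkout", journey="purchase", user_tier="premium"):
    with tracer.span("validate_cart", items=3):
        pass
    with tracer.span("charge_payment", payment_method="credit_card", amount=99.99):
        pass

for s in tracer.finished:
    print(s["name"], s["parent_id"] is None, s["attributes"])
```

Because every step shares the journey's trace ID, you can later ask "how long does checkout take for premium users?" instead of only "how slow is the payment service?"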

Service Level Objectives: The Reliability Contract

SLOs bridge the gap between business requirements and technical implementation, providing objective criteria for system reliability.

The Strategic Framework

Instead of: "Our service should be fast and reliable"

Define: "Payment processing should complete successfully 99.5% of the time, with 95th percentile latency under 500ms, measured over 30-day rolling windows"
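A definition this precise can be checked mechanically. The sketch below evaluates a window of request records against both targets. The `(succeeded, latency_ms)` record format is an assumption. `statistics.quantiles` uses its default "exclusive" interpolation, so its p95 may differ slightly from what a given monitoring backend reports.

```python
import statistics

SUCCESS_TARGET = 0.995  # 99.5% of payments succeed
P95_TARGET_MS = 500     # 95th percentile latency under 500 ms


def evaluate_slo(requests):
    """requests: list of (succeeded: bool, latency_ms: float) within the 30-day window."""
    total = len(requests)
    successes = sum(1 for ok, _ in requests if ok)
    success_ratio = successes / total
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    p95 = statistics.quantiles([latency for _, latency in requests], n=20)[-1]
    return {
        "success_ratio": success_ratio,
        "p95_ms": p95,
        "meets_slo": success_ratio >= SUCCESS_TARGET and p95 < P95_TARGET_MS,
    }


# 1,000 synthetic requests: 3 failures, latencies cycling through 100..595 ms.
sample = [(i >= 3, 100 + (i % 100) * 5) for i in range(1000)]
print(evaluate_slo(sample))
```

With this synthetic sample the success target is met (99.7%), but p95 lands around 575 ms. The SLO as a whole is therefore missed, which is exactly the kind of split verdict a vague "fast and reliable" goal cannot express.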

Error Budget as Operational Currency

Error budgets transform reliability from a philosophical debate into an operational tool:

- 100% reliability target = No error budget, so no room for feature development (risk aversion kills innovation)

- Error budget remaining = Permission to deploy new features

- Error budget exhausted = Focus shifts to reliability improvements

Strategic Decision Framework:

- Error budget > 50%: Accelerate feature development

- Error budget 10-50%: Balanced development and reliability investment

- Error budget < 10%: Halt non-critical releases, focus on stability
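The arithmetic behind these thresholds is simple. With a 99.5% target, the error budget is the 0.5% of requests allowed to fail, and "remaining" is the share of that allowance not yet used. The sketch below assumes request-based (not time-based) accounting over the rolling window. The function names are illustrative.

```python
def error_budget_remaining(slo_target, total_requests, failed_requests):
    """Fraction of the error budget still unspent (negative once overspent)."""
    allowed_failures = (1 - slo_target) * total_requests
    return 1 - failed_requests / allowed_failures


def release_policy(budget_remaining):
    """Map remaining budget onto the decision framework above."""
    if budget_remaining > 0.5:
        return "accelerate feature development"
    if budget_remaining >= 0.1:
        return "balance features and reliability"
    return "halt non-critical releases"


# 1,000,000 payments at a 99.5% target allow 5,000 failures.
remaining = error_budget_remaining(0.995, 1_000_000, 3_000)
print(round(remaining, 2), release_policy(remaining))  # 0.4 balance features and reliability
```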

Advanced Alerting: Signal vs. Noise

The Operational Challenge

Most alerting systems generate noise masquerading as signal. Teams become desensitized to alerts, leading to the dangerous pattern of "alert fatigue."

Common Anti-Pattern: Alert on every metric deviation

Strategic Pattern: Alert on business impact and error budget consumption

Multi-Signal Alert Composition

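Key Insight: Don't alert on low-level causes such as individual metric deviations. Alert on user-facing business impact and on predictive indicators, such as how fast the error budget is burning.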
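One common way to compose signals is multiwindow burn-rate alerting, described in the "Alerting on SLOs" chapter of Google's SRE Workbook. The idea is to page only when the error budget is burning fast over both a long and a short window. The sketch assumes the 99.5% SLO from earlier. The 14.4 threshold means spending 2% of a 30-day budget in one hour. Together with the 1-hour and 5-minute windows, it follows the workbook's example and should be tuned for your own service.

```python
SLO_TARGET = 0.995
ERROR_BUDGET = 1 - SLO_TARGET  # 0.5% of requests may fail


def burn_rate(errors, total):
    """How fast the budget is being spent: 1.0 means exactly on pace for the window."""
    return (errors / total) / ERROR_BUDGET if total else 0.0


def should_page(long_window, short_window, threshold=14.4):
    """Both windows are (errors, total) tuples, e.g. last 1 h and last 5 min.
    Requiring both avoids paging on short blips (long window quiet) and
    on incidents that have already recovered (short window quiet)."""
    return (burn_rate(*long_window) > threshold
            and burn_rate(*short_window) > threshold)


# 8% errors over the last hour, 10% over the last 5 minutes: burn rates of about 16 and 20.
print(should_page(long_window=(800, 10_000), short_window=(100, 1_000)))  # True
# A 5-minute spike that barely moved the hourly ratio does not page.
print(should_page(long_window=(150, 10_000), short_window=(100, 1_000)))  # False
```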

Dashboard Strategy: From Data to Insights

Audience-Driven Design

Business Stakeholders need:

- Revenue impact and customer experience metrics

- SLO compliance and error budget status

- High-level system health indicators

Engineering Teams need:

- Service dependency maps and performance bottlenecks

- Error patterns and debugging context

- Infrastructure utilization and capacity planning

SRE Teams need:

- Real-time incident response data

- Historical reliability trends

- Alert correlation and context

The Golden Signals Plus Business Context

The traditional "Golden Signals" from Google's SRE book (latency, traffic, errors, saturation) become more useful when extended with business dimensions:

- Latency → Customer experience by user tier

- Traffic → Revenue-generating vs. non-revenue traffic

- Errors → Impact on business operations vs. technical failures

- Saturation → Capacity to handle peak business periods
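The first three extended signals can be computed from per-request records once each record carries its business context. The record shape below is hypothetical. Max latency stands in for a percentile to keep the sketch short, and saturation is left out because it needs capacity data rather than request data.

```python
from collections import defaultdict

# Hypothetical request records: (user_tier, is_revenue_generating, latency_ms, failed)
records_sample = [
    ("premium", True, 320, False),
    ("premium", True, 1450, True),
    ("free", False, 90, False),
    ("free", True, 210, False),
]


def golden_signals_by_tier(records):
    """Latency, traffic and errors per user tier, each with its business slice."""
    stats = defaultdict(lambda: {"requests": 0, "revenue_requests": 0,
                                 "revenue_errors": 0, "max_latency_ms": 0})
    for tier, revenue, latency_ms, failed in records:
        s = stats[tier]
        s["requests"] += 1                                   # traffic
        s["revenue_requests"] += int(revenue)                # revenue-generating traffic
        s["revenue_errors"] += int(revenue and failed)       # errors that block revenue
        s["max_latency_ms"] = max(s["max_latency_ms"], latency_ms)  # worst latency seen
    return dict(stats)


print(golden_signals_by_tier(records_sample))
```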

Incident Response Integration

Automated Context Collection

When incidents occur, observability systems should automatically provide the relevant context rather than leaving responders to assemble it by hand.

Key Insight: Reduce mean time to context, not just mean time to detection.
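As a minimal sketch of automated context collection, the function below bundles what a responder would otherwise look up by hand: recent deployments, the most frequent error messages, and trace IDs to pivot on. The deployment and log record shapes, including the version strings and trace IDs, are hypothetical sample data.

```python
from collections import Counter
from datetime import datetime, timedelta


def collect_incident_context(alert_time, deployments, error_logs,
                             lookback=timedelta(hours=1)):
    """Bundle the context a responder usually gathers by hand when an alert fires."""
    since = alert_time - lookback
    recent_deploys = [d for d in deployments if since <= d["timestamp"] <= alert_time]
    window_errors = [e for e in error_logs if since <= e["timestamp"] <= alert_time]
    return {
        "recent_deployments": recent_deploys,
        "top_errors": Counter(e["message"] for e in window_errors).most_common(3),
        # Trace IDs let the responder jump from a log line to the full request.
        "example_trace_ids": sorted({e["trace_id"] for e in window_errors})[:5],
    }


alert_time = datetime(2024, 1, 15, 10, 30)
deployments = [
    {"service": "payment-service", "version": "v2.3.1",
     "timestamp": datetime(2024, 1, 15, 10, 5)},
    {"service": "search-service", "version": "v1.9.0",
     "timestamp": datetime(2024, 1, 14, 16, 0)},
]
error_logs = [
    {"timestamp": datetime(2024, 1, 15, 10, 28),
     "message": "Payment gateway timeout", "trace_id": "abc123def456"},
    {"timestamp": datetime(2024, 1, 15, 10, 29),
     "message": "Payment gateway timeout", "trace_id": "fed654cba321"},
    {"timestamp": datetime(2024, 1, 15, 10, 29),
     "message": "Card declined", "trace_id": "0a1b2c3d4e5f"},
]

context = collect_incident_context(alert_time, deployments, error_logs)
print(context["top_errors"])  # [('Payment gateway timeout', 2), ('Card declined', 1)]
```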

Runbook Integration

Link observability data directly to remediation procedures:

- Symptom detected → Suggested investigation paths

- Pattern identified → Relevant runbook sections

- Context gathered → Automated remediation options
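One lightweight way to wire alerts to runbooks is a rule table keyed on alert labels. Each rule maps a matching alert to an investigation path and a runbook section. The rules, label names, and runbook section titles below are hypothetical. Any automated remediation should still go through whatever approval your incident process requires.

```python
# Hypothetical routing rules: alert labels to match -> where responders should start.
RUNBOOK_RULES = [
    ({"service": "payment-service", "signal": "latency"},
     {"investigate": "gateway dependency latency",
      "runbook": "Payments / Gateway timeouts"}),
    ({"service": "payment-service", "signal": "errors"},
     {"investigate": "recent deployments",
      "runbook": "Payments / Rollback procedure",
      "automated_option": "rollback (requires approval)"}),
]
DEFAULT_SUGGESTION = {"investigate": "service overview dashboard",
                      "runbook": "General triage"}


def suggest(alert_labels):
    """Return the first rule whose labels all appear in the alert, else a default."""
    for match, suggestion in RUNBOOK_RULES:
        if all(alert_labels.get(key) == value for key, value in match.items()):
            return suggestion
    return DEFAULT_SUGGESTION


print(suggest({"service": "payment-service", "signal": "errors", "severity": "page"}))
```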

The Organizational Impact

Team Structure Evolution

Traditional Model: Dedicated monitoring team owns dashboards and alerts

Observability Model: Every team owns their service's observability story

Platform Team Role:

- Provides observability infrastructure and standards

- Enables teams to implement observability patterns

- Maintains cross-service correlation capabilities

Service Team Role:

- Defines business-relevant metrics and SLOs

- Implements context-rich logging and tracing

- Owns incident response for their services

Cultural Transformation

Observability changes how teams think about system reliability:

From: "The system is working" vs. "The system is broken"
To: "How well is the system serving business objectives?"

From: Reactive incident response
To: Proactive reliability engineering

From: Technical metrics in isolation
To: Business-impact correlation

Implementation Strategy

Phase 1: Foundation (Months 1-2)

- Establish the three pillars (metrics, logs, traces)

- Implement basic business-contextual instrumentation

- Define initial SLOs for critical user journeys

Phase 2: Intelligence (Months 3-4)

- Build correlation between observability signals

- Implement automated context collection

- Create audience-specific dashboards

Phase 3: Optimization (Months 5-6)

- Advanced alerting with business impact assessment

- Predictive reliability patterns

- Full incident response integration

Success Metrics

Technical Metrics:

- Mean time to resolution (target: <30 minutes)

- Alert signal-to-noise ratio (target: >80% actionable)

- Observability coverage (target: 95% of business transactions)

Business Metrics:

- Customer impact during incidents (target: <1% affected users)

- Development velocity (feature delivery should accelerate)

- Operational efficiency (reduced escalations to senior engineers)

The Strategic Outcome

From Cost Center to Competitive Advantage

When implemented strategically, observability transforms from an operational expense into a business differentiator:

Faster Innovation: Teams deploy confidently because they understand impact immediately

Superior Customer Experience: Issues are detected and resolved before customers notice

Operational Excellence: Engineers focus on building features, not fighting production fires

Data-Driven Decisions: Product and infrastructure choices based on real user impact

The Observability Maturity Model

Level 1 - Reactive: Basic monitoring, manual incident response

Level 2 - Responsive: Automated alerting, structured debugging

Level 3 - Predictive: Proactive reliability, business-aligned SLOs

Level 4 - Adaptive: Self-healing systems, continuous optimization

The Systems Architecture Advantage

The fundamental difference between basic monitoring and enterprise observability is systems thinking. Instead of collecting data points, you're designing intelligence systems that provide actionable insights.

This means:

- Thinking in stories: Data that explains what happened, why it happened, and what to do next

- Designing for correlation: Connecting metrics, logs, and traces to provide complete context

- Planning for incidents: Systems that accelerate mean time to resolution rather than just detection

- Building for business: Observability that translates technical metrics into business impact

- Optimizing for prevention: Moving from reactive firefighting to predictive reliability

When you apply these patterns, observability becomes a competitive advantage. Teams resolve incidents faster, prevent outages proactively, and make data-driven decisions about system evolution.

The result is infrastructure that teaches you how to run it better. And that's the difference between monitoring and architecture.

Next week in the DevOps Architect's Playbook: "Architecting Multi-Region Kubernetes Deployments"—exploring high availability patterns, cross-region networking, and disaster recovery strategies that keep systems running when entire regions fail.